Autoscaling takes charge of creating and destroying the instances that provide a particular application service. These instances make up an Autoscaling Group (ASG).

We must tell the Autoscaling Group which image to deploy, what instance type (size) to run it on, and other configuration details. These instructions are provided in an AWS Launch Configuration, which the ASG uses whenever it launches an instance.

This article covers how to create a custom AMI that the ASG launches whenever the service needs a new instance.

Approaches

There are several ways to make sure that new instances are ready for service when the ASG decides it’s time to start them.

First, we might use a standard Amazon AMI or an AWS Marketplace AMI that is ready to go as soon as it finishes starting up. This is easy to implement, but it rarely satisfies enterprise needs for a particular service completely. More often, we need at least to apply some custom configuration when an instance starts.

A second, more complete approach is to start from a standard or custom AMI and then use a Configuration Management tool, such as Ansible, Chef or Puppet, to get the instance configured and ready for service.

This is often a good approach, but it requires a trigger to begin configuring each new instance. AWS can publish Auto Scaling event notifications, for example through Amazon SNS or CloudWatch Events, when new instances are launched. When a monitoring application sees a new instance, it can invoke the Configuration Management tool to work on that instance.
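As a rough sketch of that event-driven trigger, the shell functions below pull the instance ID out of an EC2 Auto Scaling “EC2 Instance Launch Successful” event, look up the instance’s private IP with the AWS CLI, and hand it to Ansible. They assume some consumer you provide delivers the event JSON on standard input; the sed parsing is a simplification standing in for a real JSON parser such as jq, and the playbook name site.yml is hypothetical.

```sh
#!/bin/sh
# Sketch: configure a new instance when an EC2 Auto Scaling launch event arrives.
# Assumes the event JSON (CloudWatch Events / EventBridge format) is piped in.
# site.yml is a hypothetical playbook name.

# Print the "EC2InstanceId" value from an Auto Scaling event read on stdin.
# Simplified sed parsing to avoid a jq dependency; works for compact or
# pretty-printed JSON as long as the key and value share one line.
event_instance_id() {
  sed -n 's/.*"EC2InstanceId"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Look up the private IP of an instance (needs the AWS CLI and credentials).
instance_private_ip() {
  aws ec2 describe-instances \
    --instance-ids "$1" \
    --query 'Reservations[0].Instances[0].PrivateIpAddress' \
    --output text
}

# Hand the new instance to Ansible; the trailing comma turns "$ip," into an
# inline, single-host inventory.
configure_instance() {
  local ip
  ip=$(instance_private_ip "$1") || return 1
  ansible-playbook -i "$ip," site.yml
}
```

For example, `configure_instance "$(event_instance_id < event.json)"` would configure the instance named in a saved event. A production consumer would also need retries and SSH access to the new instance.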

Another way to trigger configuration is to place start-up scripts on a custom AMI that tell the Configuration Management tool to apply configuration to the instance itself.
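A minimal sketch of such a start-up script, assuming Ansible is already installed on the AMI and the playbooks live in a Git repository: ansible-pull clones the repository and applies a playbook to the machine it runs on. The repository URL is a placeholder, and the ANSIBLE_PULL override exists only so the function can be dry-run.

```sh
#!/bin/sh
# Sketch of a boot-time hook that asks Ansible to configure the instance it runs on.
# CONFIG_REPO is a hypothetical placeholder URL.
CONFIG_REPO="${CONFIG_REPO:-https://example.com/your-org/instance-config.git}"

# $1 = playbook inside the repository (defaults to local.yml).
self_configure() {
  # ansible-pull clones CONFIG_REPO and runs the playbook against this host.
  # Setting ANSIBLE_PULL=echo prints the command instead of running it.
  "${ANSIBLE_PULL:-ansible-pull}" -U "$CONFIG_REPO" "${1:-local.yml}"
}
```

At boot, the script would end with a call such as `self_configure`; running `ANSIBLE_PULL=echo self_configure` instead prints the command without executing it. The local.yml default mirrors ansible-pull’s own fallback when no playbook is named.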

Our Approach

For this example, we’ll show a simpler variation of the self-initializing AMI: instances that set themselves up as they are launched.

Let’s Get Started

If you haven’t already done so, download and install Packer from: https://www.packer.io/downloads.html

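Also install Ansible: http://docs.ansible.com/ansible/latest/intro_installation.html

The infrastructure part of this example also uses Terraform, so install it as well if you don’t already have it.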

You can find the code we’ll use for this approach here:

https://github.com/mikejmoore/simple-ami-asg-example

Clone the code with:

> git clone git@github.com:mikejmoore/simple-ami-asg-example.git

Creating the AMI

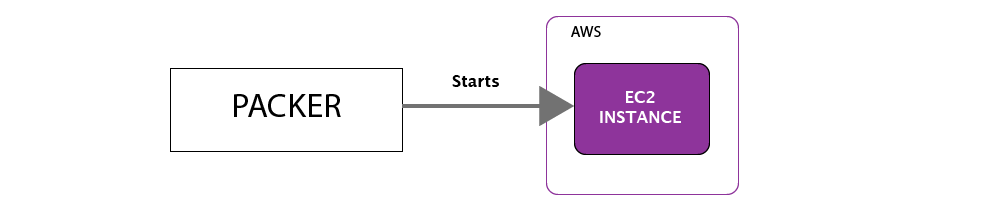

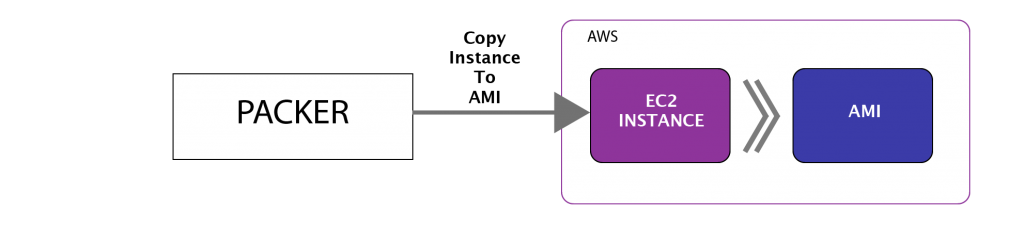

We will use Packer to automate the steps needed to build a custom AMI in AWS.

Packer first starts up an instance in AWS, using a base AMI that is specified in its configuration file (the Packer template).

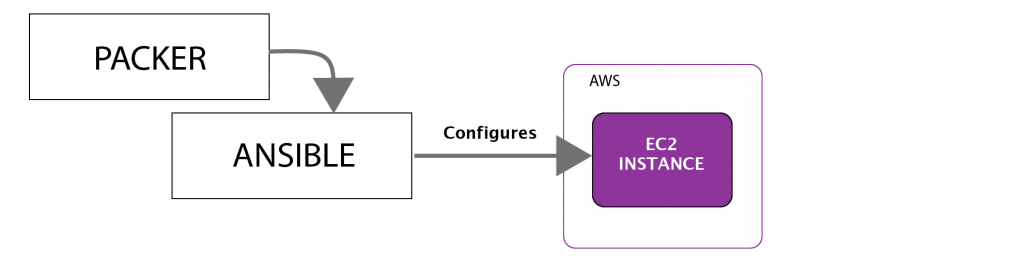

Packer then calls a configuration tool, known in Packer as a provisioner, to install the needed services and perform configuration. When provisioning is finished, Packer snapshots the instance and registers the result as an AMI.

Our example will use Ansible to perform the configuration of the image before Packer saves it as an AMI.

Packer starts an EC2 instance based on your choice of base AMI, such as Amazon Linux.

Packer then directs Ansible to configure the instance. For our example, Ansible installs Nginx.

Finally, Packer directs AWS to create an AMI from that instance and then cleans up, terminating the temporary instance.
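To make those steps concrete, here is a hypothetical minimal Packer template in the JSON format that build_ami.json uses, written out by a small shell function. It is not the repository’s file: the variable names, the playbook path and the Name tag are assumptions. The amazon-ebs builder launches the temporary instance, the ansible provisioner configures it, and Packer then creates the AMI.

```sh
#!/bin/sh
# Sketch: write a minimal Packer JSON template mirroring the steps above.
# Variable names, playbook path and tag values are hypothetical.
# $1 = output file.
write_packer_template() {
  # Quoted heredoc delimiter: the {{user `...`}} expressions are written
  # literally and left for Packer to expand at build time.
  cat > "$1" <<'EOF'
{
  "variables": {
    "region": "",
    "base_ami": ""
  },
  "builders": [{
    "type": "amazon-ebs",
    "region": "{{user `region`}}",
    "source_ami": "{{user `base_ami`}}",
    "instance_type": "t2.micro",
    "ssh_username": "ec2-user",
    "ami_name": "custom-nginx-image-{{timestamp}}",
    "tags": { "Name": "custom-nginx-image" }
  }],
  "provisioners": [{
    "type": "ansible",
    "playbook_file": "./playbook.yml"
  }]
}
EOF
}
```

After `write_packer_template ./minimal_build.json`, running `packer validate ./minimal_build.json` checks the template before a real build. The {{timestamp}} suffix keeps AMI names unique across rebuilds, since AWS rejects a second AMI with the same name in the same region.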

To kick off this AMI creation process:

> cd simple-ami-asg-example/packer_ami_build/

> packer build -var-file ./variables.json ./build_ami.json

The -var-file parameter specifies a file in which variables are defined. The Packer documentation describes a couple of other ways to define variables.
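As a sketch of two of those options, the functions below write a variables file for -var-file and, alternatively, pass the same values with repeated -var flags. The variable names region and base_ami and their values are hypothetical; substitute the names declared in build_ami.json.

```sh
#!/bin/sh
# Sketch: two ways to supply the same variables to `packer build`.
# The names region and base_ami, and their values, are hypothetical.

# 1) A variables file, used as:
#    packer build -var-file ./variables.json ./build_ami.json
# $1 = output file.
write_var_file() {
  cat > "$1" <<'EOF'
{
  "region": "us-west-2",
  "base_ami": "ami-0123456789abcdef0"
}
EOF
}

# 2) The same values passed individually with -var flags.
build_with_flags() {
  packer build \
    -var 'region=us-west-2' \
    -var 'base_ami=ami-0123456789abcdef0' \
    ./build_ami.json
}
```

A variables file is easier to keep per environment, while -var flags suit one-off overrides.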

When the Packer build finishes, we should see a new AMI in the AWS console. Navigate to the EC2 service and click the AMIs link on the side panel.

You should see “custom-nginx-image” in the list.

This image is tagged with several values that help Terraform find it when it builds the infrastructure.
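You can run a similar tag-based lookup yourself with the AWS CLI, much as Terraform’s aws_ami data source filters images by owner and tags. This sketch assumes the image carries a Name tag of custom-nginx-image; check the tags you actually see in the console, since the article does not list them.

```sh
#!/bin/sh
# Sketch: print the ID of the newest AMI you own whose Name tag matches $1.
# The tag key "Name" is an assumption; use the tags your build applies.
latest_custom_ami() {
  aws ec2 describe-images \
    --owners self \
    --filters "Name=tag:Name,Values=$1" \
    --query 'sort_by(Images, &CreationDate)[-1].ImageId' \
    --output text
}
```

For example, `latest_custom_ami custom-nginx-image` prints the newest matching image ID, or None if nothing matches.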

Creating the Infrastructure

Now we will use Terraform to create the networking, security groups and Auto Scaling group for the Nginx service.

Inside the terraform_infrastructure directory, the main.tf file contains the configuration for the new infrastructure, with inline comments explaining the purpose of each section. One section worth noting is the “aws_launch_configuration”, which the Auto Scaling group uses to decide how to launch the EC2 instances that make up the service. This section tells the Auto Scaling group to use the Nginx AMI that we created in the first part of this example.

    resource "aws_launch_configuration" "nginx" {
      name                        = "example-1-launch-group"
      image_id                    = "${data.aws_ami.nginx.id}"
      instance_type               = "t2.micro"
      user_data                   = "${file("./files/user_data.sh")}"
      security_groups             = ["${aws_security_group.nginx_instance.id}"]
      associate_public_ip_address = true
      key_name                    = "${var.key_pair}"
    }

The User Data Hook

The launch configuration includes the “user_data” attribute, which is our hook for a script that the EC2 instance runs as it finishes initialization (by default, only on its first boot). This example reads in the local file files/user_data.sh. If you inspect that file, you will see that it writes a static text file, served by Nginx, that contains the instance’s host name, so a browser requesting that file sees which instance answered.
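The repository’s user_data.sh is not reproduced here, so the following is a hypothetical sketch of a script with the behavior described: it writes identity.txt, containing the host name, into the Nginx document root. The text format copies the sample response shown later in this article; the document root /usr/share/nginx/html is an assumption based on the default Nginx package on Amazon Linux.

```sh
#!/bin/sh
# Hypothetical sketch of what files/user_data.sh might contain; the
# repository's script may differ. cloud-init runs user data as root.

# Write identity.txt into a document root so Nginx serves it at /identity.txt.
# $1 = document root, $2 = optional host name (defaults to this machine's).
write_identity() {
  local docroot name
  docroot="$1"
  name="${2:-$(hostname)}"
  mkdir -p "$docroot" || return 1
  echo "AUTOSCALING EXAMPLE 1. I am $name" > "$docroot/identity.txt"
}
```

On the instance, the script would finish with `write_identity /usr/share/nginx/html`. The optional host-name argument is only there to make the function easy to try out locally.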

Let’s run Terraform and inspect the resulting infrastructure:

> cd terraform_infrastructure

> terraform init

> terraform get

> terraform apply

Terraform needs a few minutes to create the infrastructure. It configures the Auto Scaling group to start two Nginx instances initially.

When Terraform finishes, the instances that will answer web requests may not be ready yet. You can monitor their status in the AWS console by navigating to the EC2 service and choosing “Instances” in the left-hand panel.
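Instead of watching the console, you could poll the Auto Scaling group from the command line. This sketch assumes the AWS CLI is configured and that you know the group’s name, which this article does not show (main.tf or the console will have it); it waits until at least the requested number of instances report the InService lifecycle state.

```sh
#!/bin/sh
# Sketch: wait until an Auto Scaling group reports enough InService instances.

# Print "InstanceId LifecycleState HealthStatus" for each instance in group $1.
asg_instance_states() {
  aws autoscaling describe-auto-scaling-groups \
    --auto-scaling-group-names "$1" \
    --query 'AutoScalingGroups[0].Instances[*].[InstanceId,LifecycleState,HealthStatus]' \
    --output text
}

# Count input lines whose second column is InService (reads stdin).
count_in_service() {
  awk '$2 == "InService" { n++ } END { print n + 0 }'
}

# $1 = group name, $2 = desired count. Polls every 15 seconds and gives up
# (returning 1) after 40 attempts, roughly 10 minutes.
wait_for_in_service() {
  local attempts
  attempts=0
  while [ "$(asg_instance_states "$1" | count_in_service)" -lt "$2" ]; do
    attempts=$((attempts + 1))
    [ "$attempts" -ge 40 ] && return 1
    sleep 15
  done
}
```

For example, `wait_for_in_service my-nginx-asg 2`, where my-nginx-asg is a placeholder. InService means the instance has joined the group; the load balancer’s health check may take a little longer to mark it healthy.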

When the EC2 instances are running, we can test the infrastructure. The example places a load balancer in front of the Nginx instances, and the load balancer’s DNS name can be entered as an address in a web browser.

To find the DNS name of the load balancer, navigate the AWS console to the EC2 service and click the Load Balancers link in the left-hand column. Find the “nginx-load-balancer” created by this example and view its details. Copy the value of the DNS name field into your browser. The load balancer endpoint should look something like “nginx-load-balancer-3453423481.us-west-2.elb.amazonaws.com”.
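The same DNS name can be fetched with the AWS CLI. This sketch assumes the example created a Classic Load Balancer named nginx-load-balancer; if it is an Application Load Balancer instead, the commented elbv2 command applies.

```sh
#!/bin/sh
# Sketch: print the DNS name of the load balancer named $1.
# Assumes a Classic Load Balancer. For an Application Load Balancer use:
#   aws elbv2 describe-load-balancers --names "$1" \
#     --query 'LoadBalancers[0].DNSName' --output text
lb_dns_name() {
  aws elb describe-load-balancers \
    --load-balancer-names "$1" \
    --query 'LoadBalancerDescriptions[0].DNSName' \
    --output text
}
```

For example, `lb_dns_name nginx-load-balancer` prints an address you can paste into the browser.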

You can append /identity.txt to the endpoint address to see information that proves the instances initialized themselves. Each instance should report its own host name, which is derived from the instance’s private IP address.

Refresh your browser repeatedly to see the two EC2 Nginx instances take turns answering web requests. You can also use curl (replace the load balancer address with your own load balancer’s address):

> curl http://nginx-load-balancer-3453423481.us-west-2.elb.amazonaws.com/identity.txt

AUTOSCALING EXAMPLE 1. I am ip-10-0-41-198

You can also send a hundred requests in one command using curl’s URL globbing. You should see two different instances sending back their host names:

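> curl 'http://nginx-load-balancer-3453423481.us-west-2.elb.amazonaws.com/identity.txt?a=[1-100]'

The single quotes stop the shell from treating the brackets as a file-name pattern; curl expands [1-100] itself.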
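To see the distribution at a glance, you can pipe those responses into a small tally. This sketch assumes each response has the “I am” form followed by a host name, as in the sample output above; adding -w '\n' to curl writes a newline after every response so that the responses cannot run together.

```sh
#!/bin/sh
# Sketch: count how many responses each instance served.
# Reads identity.txt responses on stdin; prints "count hostname" per instance.
tally_hosts() {
  sed -n 's/.*I am \([^[:space:]]*\).*/\1/p' | sort | uniq -c | awk '{ print $1, $2 }'
}
```

For example, `curl -s -w '\n' 'http://nginx-load-balancer-3453423481.us-west-2.elb.amazonaws.com/identity.txt?a=[1-100]' | tally_hosts` prints one line per instance, with its request count first.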

The point of doing self-initialization in the launch configuration’s user_data is that the user_data.sh script can perform almost any action as the instance finishes bootstrapping. Examples of what you might do with the user_data script include:

- Call other scripts during instance boot.

- Install additional software.

- Copy application artifacts from an S3 bucket or other location.
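A sketch of a user_data script that does all three, assuming an Amazon Linux AMI (yum and the AWS CLI are available) and an instance profile that allows reading from S3. The bucket, object key, package and helper-script path are hypothetical.

```sh
#!/bin/sh
# Sketch of a richer user_data script covering the ideas in the list above.
# The S3 URI, the package and the helper-script path are hypothetical.
ARTIFACT_URI="${ARTIFACT_URI:-s3://example-bucket/site/content.tar.gz}"
DOCROOT="${DOCROOT:-/usr/share/nginx/html}"

bootstrap() {
  # Install additional software (yum on Amazon Linux; user data runs as root).
  yum install -y jq || return 1
  # Copy an application artifact from S3 and unpack it into the web root.
  # Needs the AWS CLI and an instance profile that allows s3:GetObject.
  aws s3 cp "$ARTIFACT_URI" /tmp/content.tar.gz || return 1
  tar -xzf /tmp/content.tar.gz -C "$DOCROOT" || return 1
  # Call another script baked into the AMI, if it exists.
  if [ -x /opt/bootstrap/extra.sh ]; then
    /opt/bootstrap/extra.sh
  fi
}
```

The script would end by calling `bootstrap`; on Amazon Linux, its output and any errors land in /var/log/cloud-init-output.log on the instance.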

Summary

Many times when we use autoscaling in our AWS infrastructure, we need to install or configure services on instances as they start up. This example used one technique: build a custom AMI, then place the remaining initialization in a user_data script. This is one of several initialization approaches for successful autoscaling.